The Great AI Ecosystem Shift: From Chips to Infrastructure, What NVIDIA and Meta are Signaling

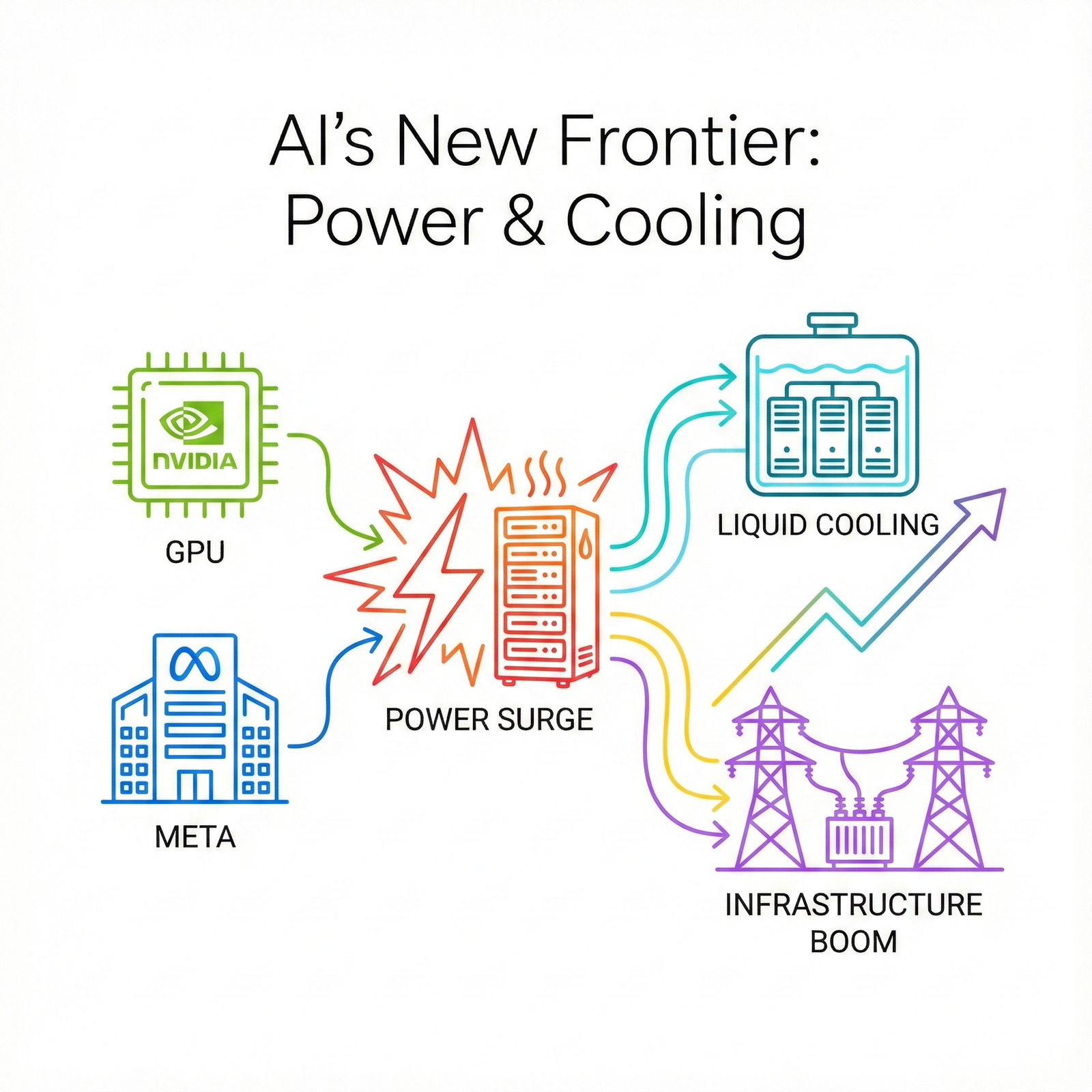

NVIDIA and Meta’s recent strategic moves indicate a major shift in AI growth: from chip competition to infrastructure build-out. This article analyzes the key changes, including surging data center power demand and the resulting transition in cooling technology, with a focus on the new structural trends of the AI era.

Key Takeaways

✔ AI Market Maturation: The core focus of AI growth is structurally shifting from chip performance rivalry to the infrastructure required to sustain the chips.

✔ NVIDIA’s Strategy Expansion: NVIDIA’s move to offer full rack-scale integrated solutions (e.g., NVL72) beyond simple GPU sales is a technical response to the power and cooling constraints within data centers.

✔ Explosive Power Demand: AI data centers draw far more power than traditional facilities (an estimated 2 to 3 times more per rack), driving massive investment in North American power generation, transmission, and distribution infrastructure.

✔ Cooling Technology Transition: To manage the high thermal output of high-performance GPUs, Liquid Immersion Cooling (LIC) is emerging as the next standard, signaling structural growth in related solution markets.

The strategic shifts of leading companies in the Artificial Intelligence (AI) revolution are becoming a critical focal point in the U.S. tech market. The recent moves by pioneers such as NVIDIA and Meta clearly indicate that the essence of AI growth is moving beyond mere GPU performance competition into a new phase: the deployment of physical infrastructure capable of sustaining AI reliably and scalably.

Meta’s decision to pursue its own AI chip development while also considering Google’s AI chips (as reported in November 2025) reflects a structural need among Big Tech to diversify the supply chain and reduce reliance on a single chip provider. Simultaneously, these companies are pouring enormous capital into large-scale data center infrastructure expansion.

These latest developments hint at the technical and physical bottlenecks facing AI growth. This article aims to provide essential background information by offering an in-depth, data-driven analysis of the structural changes occurring in power grids and cooling systems—the critical next steps in the AI growth curve.

The Inevitable Consequence of GPU Scaling: NVIDIA’s Shift to Integrated Solutions

As the complexity and precision of AI models increase, the computational load handled by a single GPU has grown sharply. The latest GPUs, led by NVIDIA's, have a significantly higher Thermal Design Power (TDP) than traditional CPUs, which means far more heat is generated inside the data center.

- Exposure to Technical Limits: As chip performance advances, data centers are confronting the physical limits of existing power supply capacity and the cooling efficiency of standard air cooling systems. Failure to effectively dissipate this heat leads to GPU performance degradation and operational issues.

- NVIDIA’s Strategic Response: To address this technical hurdle, NVIDIA has expanded its business model beyond simple chip sales. It now offers rack-scale solutions (e.g., NVL72) that integrate multiple GPUs, networking, power supply, and cooling systems. This shift shows that infrastructure integration is crucial for achieving optimal GPU performance.

This trajectory suggests a structural change: the benefits of AI growth are expanding from the chip manufacturing technology itself to the ‘infrastructure ecosystem’ essential for stable chip operation.

The Power Crunch: Analyzing the Surge in AI Data Center Power Demand

The power consumption of AI data centers is unprecedented compared with data centers from the earlier cloud computing era. Because of their higher power density, AI facilities are estimated to consume 2 to 3 times more power per rack.
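To make the per-rack figure concrete, the following is a minimal sketch of how a 2 to 3 times increase in rack power scales up to the facility level. The rack count and the baseline kW per rack are hypothetical values chosen only for illustration; they do not come from the sources cited in this article.

```python
def facility_power_mw(racks: int, kw_per_rack: float) -> float:
    """Total IT load in megawatts for a hall of identical racks."""
    return racks * kw_per_rack / 1000.0


# Hypothetical inputs for illustration only (not from the article's sources).
RACKS = 1_000
BASELINE_KW_PER_RACK = 10.0

baseline_mw = facility_power_mw(RACKS, BASELINE_KW_PER_RACK)

# Apply the "2 to 3 times more power per rack" range discussed above.
for multiplier in (2.0, 3.0):
    ai_mw = facility_power_mw(RACKS, BASELINE_KW_PER_RACK * multiplier)
    print(f"{multiplier:.0f}x density: {ai_mw:.1f} MW (baseline {baseline_mw:.1f} MW)")
```

Under these assumed inputs, the same number of racks would need two to three times the grid capacity, which is why power availability rather than floor space tends to become the limiting factor.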

According to recent forecasts from the U.S. Energy Information Administration (EIA), the rapid expansion of data centers for AI and other computing services is projected to push total U.S. electricity demand to 4.186 trillion kWh in 2025, hitting an all-time high. This demand surge is already causing power grid overloads and delays in approving new data center projects in certain regions.

This structural increase in power demand provides a foundation for long-term growth in two key industries.

① Utilities and Power Supply Infrastructure

Utility companies that reliably supply power to regions where large-scale AI data centers are being built are direct beneficiaries. They secure long-term, stable power off-takers, which supports sustainable revenue and profit stability. Meeting this demand requires large-scale investment in generation and transmission infrastructure.

② Power Conversion and Transmission Equipment

In response to the power surge, major modernization projects—replacing aging grids and increasing capacity for ultra-high voltage transmission and transformers—are actively underway across North America and Europe. Consequently, manufacturers of power transformers and distribution equipment have secured order backlogs spanning several years, supporting the market analysis that this industry has entered a long-term super-cycle.

Thermal Management Innovation: Accelerating the Shift to Liquid Cooling Technology

Solving the high-density heat issue caused by concentrated high-performance GPUs is one of the most critical technical challenges in AI infrastructure deployment.

Traditional air cooling systems become inefficient as server rack power density increases, leading to huge energy consumption and high operating costs.
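One common way to quantify this overhead is Power Usage Effectiveness (PUE), defined as total facility power divided by IT equipment power. The sketch below shows how the non-IT overhead changes with PUE. The IT load and both PUE values are hypothetical and are not measurements of any specific air-cooled or liquid-cooled facility.

```python
def total_facility_kw(it_load_kw: float, pue: float) -> float:
    """PUE = total facility power / IT power, so total = IT load * PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * pue


def overhead_kw(it_load_kw: float, pue: float) -> float:
    """Non-IT overhead (cooling, power distribution losses, etc.) in kW."""
    return total_facility_kw(it_load_kw, pue) - it_load_kw


# Hypothetical values for illustration only.
IT_LOAD_KW = 1_000.0
ASSUMED_PUE_HIGH = 1.5  # assumed less efficient cooling setup
ASSUMED_PUE_LOW = 1.2   # assumed more efficient cooling setup

for label, pue in (("higher PUE", ASSUMED_PUE_HIGH), ("lower PUE", ASSUMED_PUE_LOW)):
    print(f"{label} ({pue}): overhead {overhead_kw(IT_LOAD_KW, pue):.0f} kW")
```

Because the overhead scales with the IT load, any inefficiency in cooling grows in absolute terms as racks become denser.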

- The Rise of Liquid Immersion Cooling (LIC): LIC, a method where servers are directly submerged in a special dielectric coolant, is emerging as an innovative alternative. Reports indicate LIC can improve cooling efficiency by over 30% compared to air cooling and significantly reduce the data center’s total energy consumption.

- Structural Market Growth: Market research reports project that the global liquid cooling market, fueled by the rapid growth of AI data centers, will grow at an annual rate of more than 18.5% through 2030, potentially forming a new multi-trillion-won industry ecosystem.
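To put the 18.5% figure in perspective, here is a small sketch of what that rate implies if it compounds every year. Treating the figure as a compound annual rate is an assumption, and the market's starting size is deliberately left out because it is not stated here.

```python
import math


def growth_multiple(annual_rate: float, years: int) -> float:
    """Size relative to the starting year after compounding for `years` years."""
    return (1.0 + annual_rate) ** years


def doubling_time_years(annual_rate: float) -> float:
    """Years needed to double at a constant compound annual rate."""
    return math.log(2.0) / math.log(1.0 + annual_rate)


RATE = 0.185  # the 18.5% annual growth rate cited above, assumed to compound

for years in (1, 3, 5):
    print(f"after {years} year(s): {growth_multiple(RATE, years):.2f}x")

print(f"doubling time: {doubling_time_years(RATE):.1f} years")
```

At this rate, a market roughly doubles in about four years and reaches about 2.3 times its starting size after five.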

Together, these trends point to high-efficiency liquid cooling solutions becoming the standard for AI infrastructure.

Comparative Analysis: AI Structural Investment Positions (2024 vs. 2025)

The following table compares the structural changes in key investment areas as the AI industry matures.

| Category | 2024 (Chip-Driven Growth Phase) | 2025 Onward (Infrastructure & Efficiency Optimization) | Key Change Factor |
|---|---|---|---|
| Growth Driver | LLM development, GPU performance, early supply monopoly | Commercial AI adoption, increasing demands for power/cooling efficiency | Widespread Commercial AI Deployment |
| Investment Focus | AI Chip Manufacturers, CSPs (Cloud Service Providers) | Power Infrastructure, Specialized Cooling Solutions, High-Efficiency DC REITs | Urgency of Physical Bottleneck Resolution |
| Demand Characteristics | Short-term spikes in GPU orders (High Volatility) | Long-term, multi-year planned infrastructure build-out (Low Volatility) | Increased Long-Term Investment Stability |
| Primary Risks | Intensifying chip competition, technology obsolescence risk | Infrastructure build delays, regulatory hurdles, environmental/NIMBY issues | Physical Deployment Complexity |

Source: S&P Global Market Intelligence, EIA Data, Self-Analysis (December 2025)

The recent strategic actions of NVIDIA and Meta unequivocally illustrate that the next phase of AI development transcends technical superiority, focusing instead on overcoming physical and infrastructure constraints.

As AI model performance continues to advance, the efficiency of power supply and thermal management becomes the decisive factor in the success of the AI ecosystem. The record-breaking increase in data center power demand and the rapid shift towards liquid cooling technology observed across North America strongly suggest a structural and inevitable transformation within the AI industry.

Reference Links

- U.S. Energy Information Administration (EIA) – Annual Energy Outlook

- Meta Engineering Blog – MTIA v2 Announcement

- NVIDIA Technical Blog – GB200 NVL72 Architecture

- Goldman Sachs Insights – AI, Data Centers and the Coming US Power Demand Surge

- Grand View Research – Immersion Cooling Market Analysis